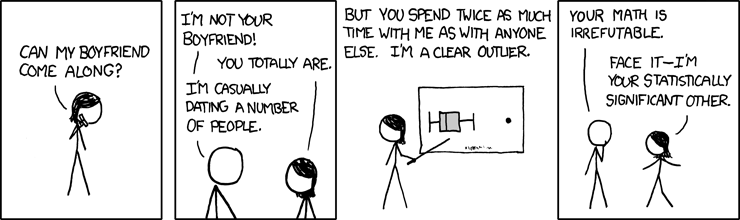

Statistical significance. It’s so basic a concept that many modelers and statisticians don’t bother to check for it in simpler analyses such as marketing campaign comparisons, population distributions, and so forth. Nevertheless, statistical significance can make all the difference (pun fully intended) in whether results are jaw-dropping or trash-worthy. And when speaking with the business side of the house, you’d better know which is which.


Tip for Translation: Less Insignificant Info is More

First of all, check for significance. That could go without saying… but it doesn’t (see above). If the results are not significant, do not put them out there for the business to jump all over… which they will. If they are close to significant and you want to share them, add a caveat or footnote saying that the difference may simply be due to random variation. Do not use technical terms here.

There are two common ways that analysts try to give flak about this:

“Yeah, but my tests always appear significant because I have enough data that even tiny differences get picked up due to the sample sizes.”

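Try bootstrapping smaller samples for comparison (that is, repeatedly draw random samples of a more modest size from your data and rerun the test on each) and see what happens. If you are still getting significant results, good on ya’. If not, maybe the results weren’t as significant to start with.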
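Here is a minimal sketch of that check, assuming a simple response-rate comparison between two campaign groups. The data, the group names, the sample size of 2,000 and the helper functions are all made up for illustration, and the pooled two-proportion z-test is just one reasonable choice of test, not the only one.

```python
import math
import random

def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value for a pooled two-proportion z-test."""
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (successes_a / n_a - successes_b / n_b) / std_err
    return math.erfc(abs(z) / math.sqrt(2))

def share_significant(group_a, group_b, sample_size, rounds=200, alpha=0.05, seed=0):
    """Fraction of smaller resamples in which the difference is still significant."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(rounds):
        # Draw with replacement, as in a bootstrap, but at a reduced sample size
        sub_a = rng.choices(group_a, k=sample_size)
        sub_b = rng.choices(group_b, k=sample_size)
        p = two_proportion_p_value(sum(sub_a), sample_size, sum(sub_b), sample_size)
        if p < alpha:
            hits += 1
    return hits / rounds

# Hypothetical data: 1 = responded, 0 = did not respond
group_a = [1] * 10_400 + [0] * 189_600   # 5.2% response rate
group_b = [1] * 10_000 + [0] * 190_000   # 5.0% response rate

full_p = two_proportion_p_value(sum(group_a), len(group_a), sum(group_b), len(group_b))
small_share = share_significant(group_a, group_b, sample_size=2_000)

print(f"p-value on the full data: {full_p:.4f}")
print(f"share of 2,000-record resamples that are significant: {small_share:.0%}")
```

If the full-data test is significant but only a small share of the resamples agree, that is a hint that sheer volume is doing most of the work.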

“But the business impact of this minuscule difference is huge so therefore it doesn’t matter if the result is significant.”

This is actually even more reason to validate your results. Presumably, if the resulting teeny difference would cause a major upheaval for the business, so too would a minor variation due to natural fluctuation. Use a significance level that aligns with the importance of finding a difference. For a super-important analysis, go with an alpha of .01 or even .001 rather than the usual .05.
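To see what a stricter alpha asks of your data, here is a small sketch using a two-sided z-test as the assumed setting. The observed z-score of 2.2 is a made-up value, and `critical_z` and `is_significant` are illustrative helper names.

```python
from statistics import NormalDist

def critical_z(alpha):
    """Two-sided critical z-value for a given significance level."""
    return NormalDist().inv_cdf(1 - alpha / 2)

def is_significant(z, alpha):
    """True when the observed z-score clears the bar set by alpha."""
    return abs(z) > critical_z(alpha)

observed_z = 2.2  # hypothetical test statistic from an analysis

for alpha in (0.05, 0.01, 0.001):
    print(
        f"alpha={alpha}: need |z| > {critical_z(alpha):.2f}, "
        f"significant: {is_significant(observed_z, alpha)}"
    )
```

The same result can pass at the usual .05 and fail at .01, which is exactly the extra protection you want when the stakes are high.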

To Show or Not to Show Statistical Significance…

More often than not, business stakeholders only want to see results that are significant. They want to know how the analysis can be used to better effect. In general, that does not mean that you go around flaunting a p-value. Just state the results and how to use them and move on. On the other hand, it is usually informative and interesting for business users to see statistically insignificant results when they confirm or debunk a long-held hypothesis.

For example, let’s say that there is a “gut feel” that customers who buy diapers also buy beer. After doing some testing on purchase data, you find that there is no significant link between these two product categories. The business stakeholders (and holders of the gut feeling) would likely need to know that the data show no meaningful relationship between these two items, because it affects future store and display layouts.
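One way to run that kind of test is a chi-square test of independence on a 2x2 table of baskets. The sketch below assumes that approach (without a continuity correction), and the basket counts are invented purely for illustration; `chi_square_2x2` is a hypothetical helper, not a library function.

```python
import math

def chi_square_2x2(both, only_a, only_b, neither):
    """Pearson chi-square test of independence for a 2x2 table (1 degree of freedom)."""
    observed = [[both, only_a], [only_b, neither]]
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    total = sum(row_totals)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # Count we would expect in this cell if the two products were unrelated
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-square tail probability reduces to erfc
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value

# Hypothetical counts from 10,000 baskets
chi2, p_value = chi_square_2x2(
    both=205,       # diapers and beer
    only_a=795,     # diapers, no beer
    only_b=1_795,   # beer, no diapers
    neither=7_205,  # neither product
)

print(f"chi-square = {chi2:.3f}, p-value = {p_value:.3f}")
```

With these made-up counts the p-value lands well above .05, so the gut feeling gets no support from the data.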

Got some great examples of significant results that really weren’t? Or times when instincts were proven right/wrong? I’d love to hear all about your adventures with statistical significance in the comments.

One of the most difficult parts of any analyst’s job is packaging and presenting analytics work for a


Slicing data into manageable chunks for viewing is crucial when you start dealing with more records than will fit in something like Excel (without PowerPivot, of course). One of the most common ways to look at data in a more easily-digestible manner is to use percentiles, or some derivative thereof, to group records based on a ranking. This allows you to then compare equal-sized groups to one another in order to form conclusions as to relative behavior.

Recently, in speaking with the President of Kobie regarding my team, I used the term “data munging” to describe a lot of the work that we do. He laughed, thinking I had said “data munching” (mmmm, tasty!) and asked if that was a technical term. The short answer is that yes, it is another term for data wrangling (which, incidentally, is one of my favorite terms in the industry).

This one had been bugging me for a while now. There are a lot of analyses where it is useful to select multiple random groups. Usually, this would involve picking a bunch of numbers out of your head and trying them as the seed values (I like using phone numbers without the area codes – then I can call the person and tell them they rocked my randomization).
