The Promise of Remarkable 2

Remarkable 2 is touted as a paper-like experience to replace notebooks and printed documents. This is a simple, sleek device trying to solve a basic problem.

The key features they call out are

- eye-friendly screen,

- paper-like feel,

- conversion of handwriting to text,

- organization across devices, and

- taking notes or writing on documents.

It even highlights the lack of other functionality as a benefit – no distractions you might otherwise get from using a conventional tablet for similar purposes.

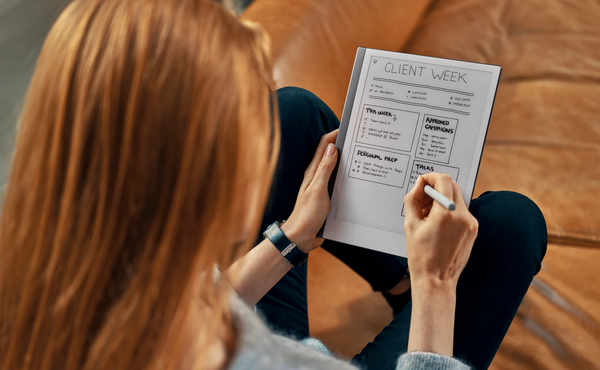

As an avid note-taker and adherent of paper-and-pen specifically, I was immediately interested in the possibility of converging on one device. Prior to this, I’ve been using the LiveScribe smart pen and its associated dot paper for most of my work, learning, and research note taking. This led to a proliferation of notebooks on my desk (or in my backpack) at any given time due to my using one notebook per topic and hand cramps due to the weight of the pen itself.

I also keep a paper bullet journal that I hope to digitize. I spend quite a bit of time setting up new pages at the start of the month. Having something where I can just duplicate the page and then erase would be fantastic. This journal is also a place I feel more creative – using colored pencils, decorative tapes, stickers, stencils, etc. Drawing is not my forte so we’ll see how this goes.

Review of the Remarkable 2

The Remarkable 2 writing tablet arrived about a week ago. The required marker/stylus is sold separately. I opted for the slightly fancier one that includes built-in eraser capability. That means $99 rather than $49 so I can flip the marker over to delete mistakes. I also got the book-style folio in grey, polymer weave. This allows me to quickly flip open the cover rather than trying to dig the tablet out of a pocket-style holder. Both of these seem worth it to me as I use this thing a lot and they provide some quality of life improvements over the less schmancy options.

The big question – does Remarkable 2 feel like paper? No. Sorry, I’m a purist. This is still too “smooth” to feel like paper to me. It’s good enough, I think, for my trying to take notes and retain information for reference.

The form factor is good. I’d prefer a slightly larger screen size, closer to Letter paper, if I could. Not a deal breaker. The marker is comfortable to hold. Its magnetic side allows it to easily snap to the side of the tablet for storage. That’s certainly helpful so you don’t lose a very expensive piece of the kit.

Getting started was pretty quick. The tablet is touchscreen or you can use the marker to navigate. Just press the one button on top to turn it on and start using it. First step is to connect to the internet. One con here is that it syncs to the Remarkable cloud host. This makes me a little nervous with respect to privacy. Could be my own paranoia at play but I’ll be cautious about sensitive information I write down on this.

Remarkable allows for folder structure, including nesting folders, with notebooks within them and pages within those. You can move notebooks, pages, or folders around if you need to later. When creating a notebook, you specify the default page template (lined, planner, grid, music staff, etc). You can change the template at any time for any page.

Creating a new page is as easy as swiping from the right. I will say that this has happened accidentally a couple of times as my hand rested on the screen while writing. The new page will inherit the last used page template and marker style.

Pages have the ability to have multiple layers even above the template. I’ve not played with this much just based on my usage pattern. Could see it being useful for diagramming and such.

The marker itself is not electronic. This is something I appreciate versus electronic pens such as Apple Pencil. In fact, my first encounter with an Apple Pencil was someone complaining that their Pencil was running out of charge. That’s not an issue here.

Writing is smooth but takes a little getting used to. The ink has a slight lag, which is initially off-putting. It took a couple of days to not notice. It’s also a little pixelated, even when using the paintbrush or calligraphy marker styles, and slightly off of where I feel the marker tip is. Not bad, but noticeable and seems worse at the bottom of the screen. Similarly, the eraser seems to have a larger footprint than I’d think based on where the marker touches the screen.

The device has 8GB of internal storage, only 6.4GB of which is available due to OS usage. In one week I’ve filled about 0.4GB of this. Not sure what happens when I run out… Guess I’ll come to that in a few months. Presumably I’ll need to convert some stuff to PDF and move it off to another service. I’ve not yet downloaded the desktop app to see how managing from there works. I can follow up if there’s interest on this.

A recap of sorts on features – available and lacking

Remarkable 2 Features I’m Enjoying (Available):

- Templates – The templates give you essentially a page layout. There are a bunch to choose from like grid, dot, lined, planner, checklist, storyboard, etc. Once you pick a template, it is automatically applied to subsequent pages generated in the notebook.

- Marker Style – You can choose how the ink appears when you write. For most marker styles, the pressure and angle of the marker will then vary the line. I’m not creative enough to use this well, but I like being able to pick the one I want.

- Organization Hierarchies – The ability to nest folders and notebooks and such and move them around is helpful given the inability to search within the handwritten notes. You can also move pages around between notebooks which can help if you put a bunch of stuff in Quick Notes and then need to classify.

- Send to Remarkable from Web – there’s a browser add-on that allows you to send articles from websites to the tablet as PDFs. This is a nice one for me as I tend to open a lot of tabs for articles I want to read eventually. I don’t prefer to read them on my laptop screen so this acts like a Kindle for articles.

- Convert to text and send – the handwriting recognition works pretty well. The ability to convert directly on the device and then send is a benefit over some others tools where you first have to open an app.

- Focus – when I look at the Remarkable 2, it does not try to remind me of things. There are no notifications. There are no messages coming in. It just takes the notes – and therefore keeps me more focused on the content at hand.

- Swipe to Create New Page – it’s super simple and vaguely mimics turning a physical page. Also nice that it remembers the last settings.

- Portrait vs Landscape – you can set the device to landscape rather than portrait mode (or just turn it and continue writing. This was helpful for me when quickly adding an architecture diagram in the middle of other notes.

Features I Wish Remarkable 2 Had (Lacking):

- Search within handwritten notes – you can search folder or notebook names, PDFs or eBooks on the device but not your actual notes. There is a feature to “Convert to Text and Send” but this means it’s no longer on the device to search.

- Name, label, or tag pages – I use this to capture notes on all sorts of things and like having similarly-themed notes in one notebook. However, I would also like to be able to quickly browse within the notebook for specific information. Being able to at least tag or name pages would help a ton, especially as the notebook grows.

- Specify default pen style – mine defaults to “Mechanical Pencil” whereas I prefer “Fineliner”. Once selected in a notebook, the device remembers what was last used and continues to use that until changed.

- Convert to text and leave on the device – there’s a feature to convert to text and send… but not one that just converts to text. Seems weird and limits searchability.

- Ability to tag a place in notes – I use certain symbols to denote things like outstanding questions, action items, or important notes. Being able to indicate where these are (since I cannot search for them) would be a big help.

- Sync with other tools – The only means of getting notes out of Remarkable is to send by email, convert to text and send, or convert to PDF, PNG, or SVG on the desktop app and then push to other tools (e.g. Google Drive, Dropbox, Evernote, OneNote, etc.)

- Back Button or Quick Link Assignment – the quick links menu in the top swipe allow for the creation of a new folder, notebook, or quick sheet or search for something else. I find myself often wanting to go back to a specific other notebook and wish there was a way to jump from here to “Last Opened” or assign a bookmark.

- Fully hide the nav button from screen – while in a notebook page, there is a little circle in the upper left corner that allows you to open the navigation and settings. That makes the corner unavailable for writing and often causes the nav to pop up when not needed.

Final Thoughts on Remarkable 2

This is a sleek way to take notes and I think that it will suffice to replace the standard notebooks I’ve had accumulating on my desk. The one paper notebook I will likely continue to keep is my journal – but in an altered fashion. I used to have all manner of information tucked into the journal like my pets’ vaccination info or my standard work trip packing list. These could easily migrate to the Remarkable 2. My monthly and daily journal pages, though, really do suffer without some color and pizzazz. I miss my pencils already.

If I were to have a rating system, I’d say the Remarkable 2 would get about a 3/5.

What happens when you’ve positioned your business as a trusted, community member, but your customer community goes elsewhere when it’s time to make a purchase?

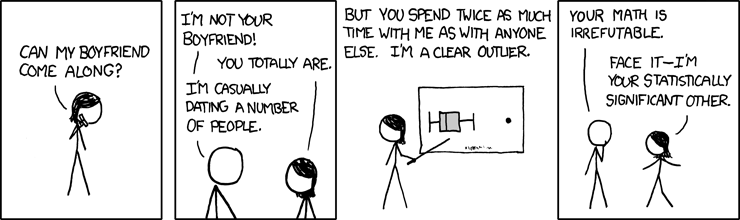

What happens when you’ve positioned your business as a trusted, community member, but your customer community goes elsewhere when it’s time to make a purchase? One of the most difficult parts of any analyst’s job is packaging and presenting analytics work for a

One of the most difficult parts of any analyst’s job is packaging and presenting analytics work for a  This picture is definitely not me. But as this is my first post regarding Hive, I felt the need to include a photo of a ridiculous beehive hairdo.

This picture is definitely not me. But as this is my first post regarding Hive, I felt the need to include a photo of a ridiculous beehive hairdo. This one has been irking me for quite a bit. If you have to insert multiple rows into a table from a list or something, you may be tempted to use the standard PostgreSQL method of…

This one has been irking me for quite a bit. If you have to insert multiple rows into a table from a list or something, you may be tempted to use the standard PostgreSQL method of… Netezza is a super-fast platform for databases… once you have data on it. Somehow, getting the data to the server always seems like a bit of a hassle (admittedly, not as big a hassle as old school punchcards). If you’re using Netezza, you’re probably part of a large organization that may also have some hefty ETL tools that can do the transfer. But if you’re not personally part of the team that does ETL, yet still need to put data onto Netezza, you’ve got to find another way. The EXTERNAL TABLE functionality may just be the solution for you.

Netezza is a super-fast platform for databases… once you have data on it. Somehow, getting the data to the server always seems like a bit of a hassle (admittedly, not as big a hassle as old school punchcards). If you’re using Netezza, you’re probably part of a large organization that may also have some hefty ETL tools that can do the transfer. But if you’re not personally part of the team that does ETL, yet still need to put data onto Netezza, you’ve got to find another way. The EXTERNAL TABLE functionality may just be the solution for you.

According to

According to